Tencent has open-sourced HY-Motion 1.0, a significant step in the democratization of artificial intelligence tools for motion generation. By releasing the model to the public, Tencent aims to accelerate innovation in areas such as animation, gaming, robotics, and virtual reality, while strengthening its position in the global AI research community.

The move reflects a growing trend among major tech companies to open-source foundational AI technologies to encourage wider adoption and collaboration.

Tencent Open-Sources HY-Motion 1.0 to Expand AI Research Collaboration

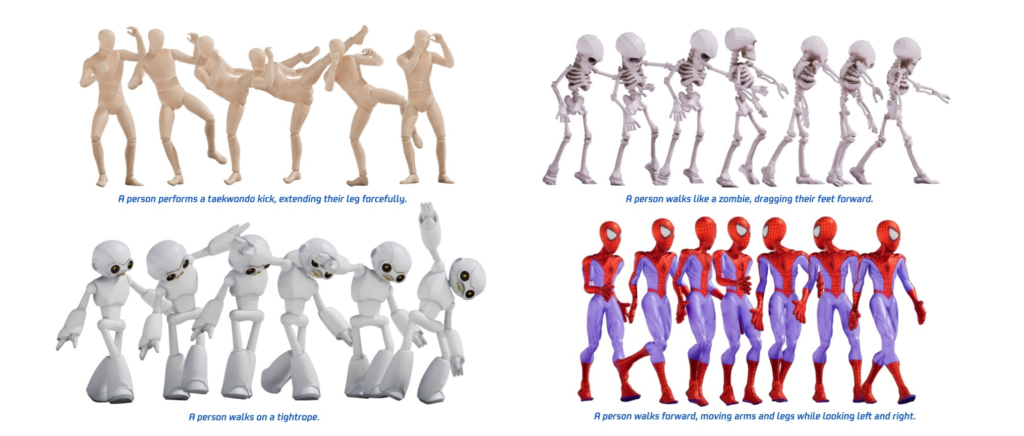

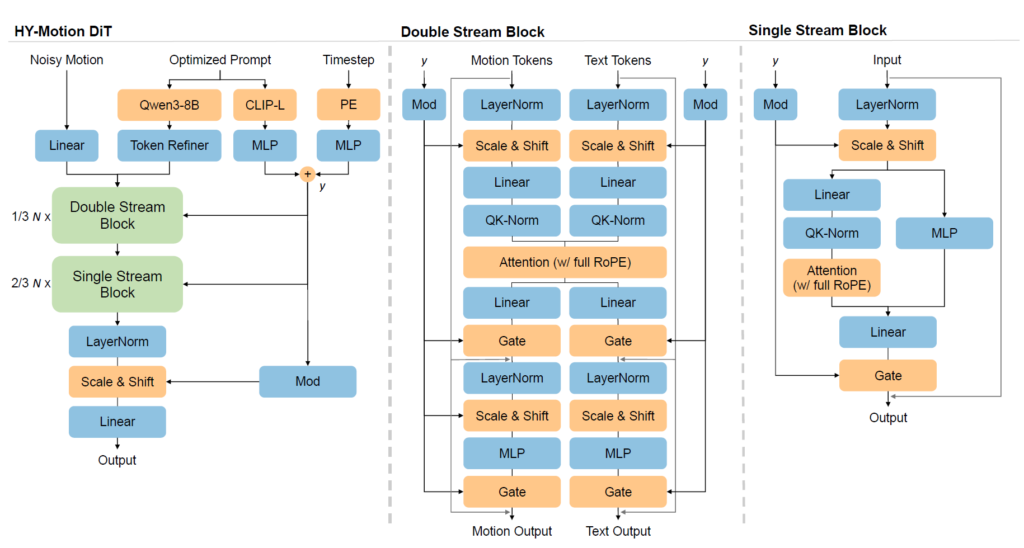

With this release, Tencent has made its advanced motion-generation model freely available to developers, researchers, and startups. HY-Motion 1.0 is designed to generate realistic human motion from text or structured inputs, enabling more natural movement in digital characters.

By open-sourcing the model, Tencent allows the global developer community to test, modify, and build upon its technology, potentially accelerating breakthroughs across multiple industries.

What HY-Motion 1.0 Brings to the AI Ecosystem

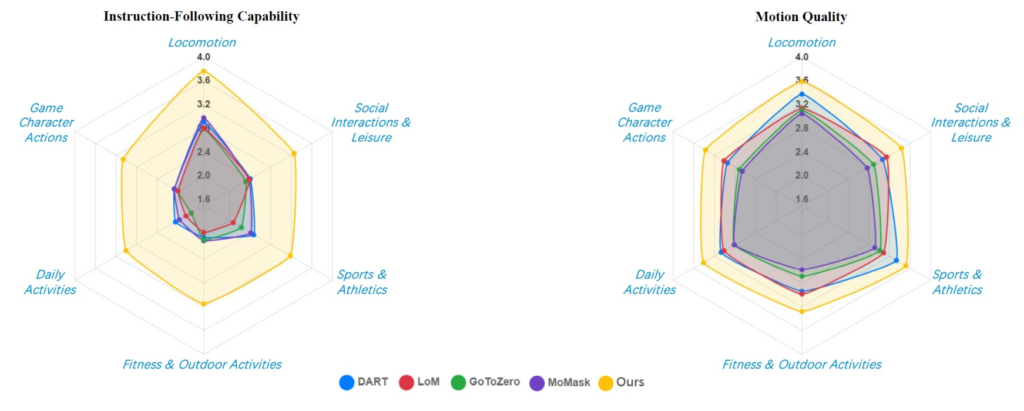

By open-sourcing HY-Motion 1.0, Tencent introduces a tool capable of producing smooth, physics-aware motion sequences. Such models are increasingly important for applications like game development, film animation, digital avatars, and embodied AI systems.

HY-Motion 1.0 is expected to reduce development time and costs for creators who previously relied on expensive motion-capture setups. With AI-generated motion, developers can create complex animations from simple text prompts, opening new creative possibilities.

Why Tencent Is Open-Sourcing HY-Motion 1.0

The decision to open-source HY-Motion 1.0 aligns with the company’s broader AI strategy. Tencent has been investing heavily in foundational AI models, cloud computing, and developer ecosystems. Open-sourcing key technologies helps attract talent, build goodwill, and position Tencent as a leader in responsible and collaborative AI development.

It also allows Tencent to benefit indirectly, as improvements made by the open-source community can feed back into its own products and platforms.

Impact on Gaming, Metaverse, and Robotics

The potential impact of an open-source HY-Motion 1.0 is especially strong in gaming and virtual environments. More realistic character movement enhances immersion, a critical factor for next-generation games and metaverse experiences.

Beyond entertainment, HY-Motion 1.0 could also support robotics research, where translating high-level commands into natural movement remains a major challenge. Researchers can experiment with motion planning and human-like movement using the open-source model as a foundation.

China’s Growing Role in Open-Source AI

Tencent’s release of HY-Motion 1.0 highlights China’s increasing participation in the global open-source AI ecosystem. While geopolitical tensions continue to shape technology policy, Chinese firms are increasingly releasing tools and models to gain international relevance and influence in AI standards.

Such initiatives help Chinese tech companies engage with global researchers and showcase domestic innovation on a worldwide stage.

Challenges and Responsibilities Ahead

Although open-sourcing HY-Motion 1.0 brings many benefits, it also raises questions about responsible use. Motion-generation models can be misused to create deepfakes or misleading content if not handled carefully.

Tencent has indicated that responsible AI use and clear usage guidelines will remain important as developers adopt and adapt the model.

Final Thoughts

Tencent’s open-sourcing of HY-Motion 1.0 represents a meaningful contribution to the AI community. By lowering barriers to advanced motion-generation technology, Tencent is enabling creators, researchers, and startups to experiment and innovate at a faster pace.

As AI-driven animation and embodied intelligence continue to evolve, HY-Motion 1.0 could become a foundational building block for the next generation of digital experiences.