Tensions between the U.S. Department of War (formerly the Department of Defense) and Anthropic reached a breaking point on February 15, 2026. Following reports that the military was “fed up” with Anthropic’s refusal to remove ethical guardrails, senior administration officials confirmed that “everything is on the table,” including the termination of Anthropic’s contract, estimated at $200 million.

The standoff centers on the Pentagon’s demand for access to AI tools for “all lawful purposes,” a standard that would require Anthropic to waive its prohibitions against using its Claude model for combat, weapons development, and domestic surveillance.

The Core Dispute: “Bright Red Lines”

While Anthropic maintains its commitment to U.S. national security, CEO Dario Amodei has identified two “bright red lines” that the company refuses to cross, even for the military:

- Fully Autonomous Lethal Weapons: Systems that can select and engage targets without human intervention.

- Mass Domestic Surveillance: Large-scale monitoring of U.S. citizens.

The Pentagon, led by Secretary of War Pete Hegseth, argues that these restrictions create “operational hurdles” and that the military cannot employ models that “won’t allow you to fight wars.”

| Entity | Position |

| Pentagon | Wants a unified agreement for “all lawful purposes” without case-by-case blocking. |

| Anthropic | Refuses to waive prohibitions on autonomous violence and domestic spying. |

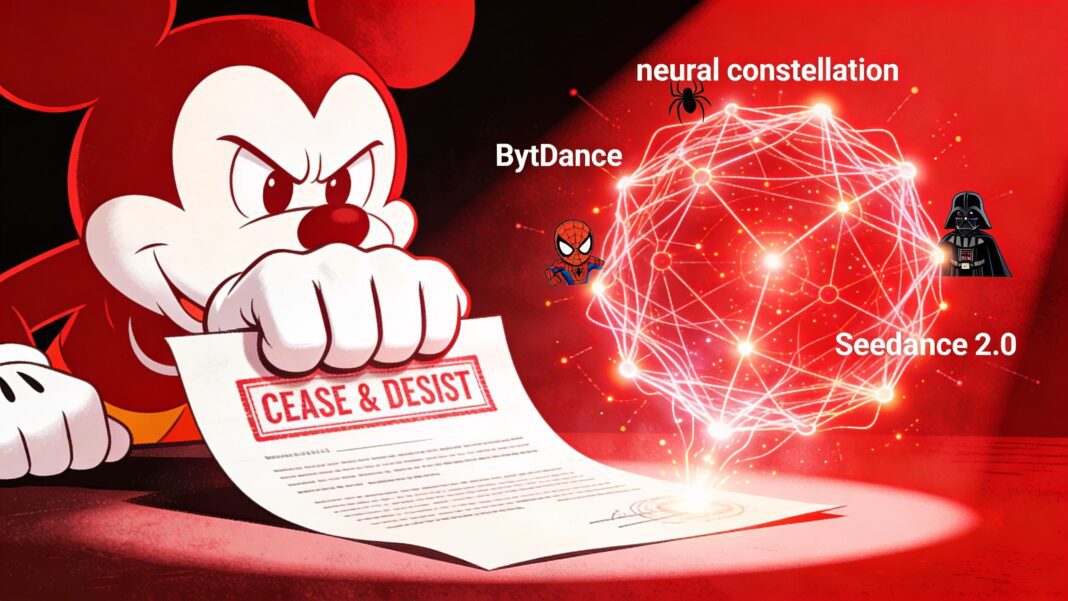

| Rivals (OpenAI, Google, xAI) | Reportedly showing “more flexibility” and agreeing to lift standard civilian restrictions for defense. |

The Venezuela Fallout: A Catalyst for Tensions

The dispute was exacerbated by the January 3 raid in Venezuela that captured Nicolás Maduro. On February 13, 2026, reports revealed that Claude AI was used during the operation via Anthropic’s partnership with Palantir Technologies.

- The Usage Violation: Anthropic reportedly questioned Palantir after learning that Claude had been active during a mission involving “kinetic fire” (bombings and shootings), a use that allegedly violated Anthropic’s safety policies.

- Pentagon Frustration: Defense officials were reportedly incensed by Anthropic’s inquiry, viewing it as a commercial company attempting to “lecture” the military on how to conduct a high-stakes capture mission.

Can the Pentagon Replace Claude?

Despite the threats, severing ties with Anthropic poses a significant technical challenge for the military.

- Classified Exclusivity: Claude is currently the only frontier AI model fully integrated and authorized for use on the Pentagon’s most sensitive classified networks.

- The “Lagging” Rivals: While OpenAI’s ChatGPT, Google’s Gemini, and xAI’s Grok are widely used on unclassified networks for administrative tasks, they are reportedly “months behind” in meeting the security requirements for the classified environments where Claude currently operates.

- Orderly Replacement: Officials noted that any termination would require an “orderly replacement” to avoid a critical gap in AI-supported intelligence and logistics.

“We will not be put in a position where our AI model unexpectedly blocks a process during a battlefield operation because of a ‘policy violation.’ If they can’t provide a tool that works under our rules, we will find someone who will.” — Senior Administration Official.