On February 6, 2026, Nvidia (in collaboration with HKUST) unveiled DreamDojo, a “foundation world model” for general-purpose robotics.

While Nvidia’s earlier Cosmos models (released in January 2026) provided a base for physical AI, DreamDojo is specifically designed to address the “data scarcity” problem in robotics by learning from human behavior rather than relying only on robot teleoperation data.

What is DreamDojo?

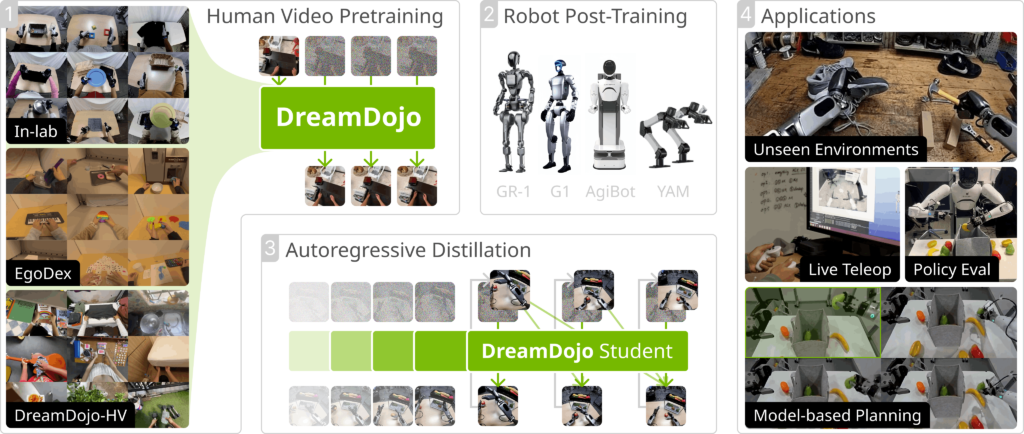

DreamDojo is a generative world model that lets robots “dream,” that is, simulate the outcomes of their actions in diverse, real-world environments. Nvidia describes it as the first foundation model of its kind to demonstrate zero-shot generalization: it can predict the outcomes of interactions with objects and environments it has never seen before.
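Conceptually, an action-conditioned world model maps the current observation plus an action to a predicted next observation, and a “dream” is that step applied autoregressively, with each prediction fed back in as the next input. The sketch below is illustrative only: `ToyWorldModel`, `predict_next`, and `dream` are hypothetical names, not DreamDojo’s API, and a trivial additive dynamics stands in for the learned video generator.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple

Observation = Tuple[float, ...]
Action = Tuple[float, ...]


@dataclass
class ToyWorldModel:
    """Hypothetical stand-in for a learned world model.

    A real model would predict the next video frame; here the
    "observation" is a small state vector and each action simply
    nudges it (an assumption made only for illustration).
    """

    gain: float = 1.0

    def predict_next(self, obs: Observation, action: Action) -> Observation:
        # Action-conditioned transition: next = obs + gain * action
        return tuple(o + self.gain * a for o, a in zip(obs, action))


def dream(model: ToyWorldModel, start: Observation,
          actions: Sequence[Action]) -> List[Observation]:
    """Roll the model forward, feeding each prediction back in as input."""
    trajectory = [start]
    for action in actions:
        trajectory.append(model.predict_next(trajectory[-1], action))
    return trajectory


if __name__ == "__main__":
    rollout = dream(ToyWorldModel(gain=0.5), (0.0, 0.0),
                    [(1.0, 0.0), (1.0, 2.0)])
    print(rollout)  # [(0.0, 0.0), (0.5, 0.0), (1.0, 1.0)]
```

Because the robot never moves during a dream, a poor action sequence costs only compute, which is what makes the evaluation and planning uses later in this article possible.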

Key Technical Innovations

- Massive Human Video Training: Trained on 44,711 hours of egocentric (first-person) human video data (DreamDojo-HV). This dataset is 15x larger than any previous robotics dataset and covers 2,000x more unique scenes.

- Latent Action Modeling: Since human videos don’t come with “robot joint commands,” DreamDojo uses continuous latent actions as a proxy. It learns the “physics of intent” from humans and then translates that knowledge to specific robot hardware (like the GR-1 or G1 humanoids).

- Real-Time Simulation: Through a specialized distillation process, the model can generate physically plausible video rollouts at 10.81 FPS. This enables “Live Teleoperation,” where a human operator can see the model’s predicted outcome of a move before the robot actually executes it.
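The latent-action idea in the list above can be illustrated in toy form. The same encoder can label any pair of consecutive frames, human or robot, with a latent action. On the small set of robot clips where the actual commands are also known, an adapter is fitted to map those commands into the latent space. This is a minimal sketch under loud assumptions: the “encoder” is just a frame difference, the adapter is a 1-D least-squares fit, and `infer_latent_action` and `fit_action_adapter` are hypothetical names. It is not DreamDojo’s actual architecture.

```python
from typing import List, Sequence, Tuple


def infer_latent_action(frame_t: Sequence[float],
                        frame_next: Sequence[float]) -> List[float]:
    """Toy inverse-dynamics encoder usable on unlabeled video.

    Assumption: the "latent action" is the per-feature change between
    consecutive frames. A learned encoder would compress this change
    into a compact continuous vector instead.
    """
    return [b - a for a, b in zip(frame_t, frame_next)]


def fit_action_adapter(robot_actions: Sequence[float],
                       latents: Sequence[float]) -> Tuple[float, float]:
    """Fit latent ~= w * robot_action + b by 1-D ordinary least squares.

    Hypothetical adapter: maps one robot's command space into the
    latent-action space, using robot clips where both are known.
    """
    n = len(robot_actions)
    mean_x = sum(robot_actions) / n
    mean_y = sum(latents) / n
    cov = sum((x - mean_x) * (y - mean_y)
              for x, y in zip(robot_actions, latents))
    var = sum((x - mean_x) ** 2 for x in robot_actions)
    if var == 0:
        raise ValueError("robot actions must vary to fit an adapter")
    w = cov / var
    return w, mean_y - w * mean_x


if __name__ == "__main__":
    # Illustrative robot clips: (frame before, frame after) per command.
    frames = [([0.0], [0.5]), ([1.0], [2.0]), ([2.0], [3.5])]
    latents = [infer_latent_action(a, b)[0] for a, b in frames]
    w, b = fit_action_adapter([1.0, 2.0, 3.0], latents)
    print(w, b)  # 0.5 0.0
    # A new robot command can now be expressed as a latent action.
    print(w * 4.0 + b)  # 2.0
```

The design point is that the expensive part, learning what actions do to the world, is learned from plentiful unlabeled video. Only the cheap mapping from a specific robot’s commands needs robot data.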

Why It Matters for Robotics

Traditional robot training often requires large amounts of expensive, manually collected data for every new task. DreamDojo flips this script:

- Safety First: Robots can “test” a high-risk maneuver in the world model’s simulation before trying it in the physical world.

- Dexterity: The model performs well on contact-rich tasks (like picking up a fragile egg or using a tool) because its training videos show humans performing such tasks across a wide variety of settings.

- Sim-to-Real Accuracy: It reports a 0.995 correlation between success rates measured inside the world model and success rates measured on real robots, making it a highly reliable “virtual proving ground.”
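The article does not say which correlation coefficient is meant. Assuming it is Pearson’s r computed over a set of policies, each with one success rate measured in the world model and one measured on the real robot, the check can be sketched as follows. The demo numbers are invented for illustration and are not DreamDojo results.

```python
import math
from typing import Sequence


def pearson_r(sim: Sequence[float], real: Sequence[float]) -> float:
    """Pearson correlation between simulated and real-world success rates."""
    if len(sim) != len(real) or len(sim) < 2:
        raise ValueError("need two equal-length sequences with >= 2 points")
    n = len(sim)
    mean_s = sum(sim) / n
    mean_r = sum(real) / n
    cov = sum((s - mean_s) * (r - mean_r) for s, r in zip(sim, real))
    var_s = sum((s - mean_s) ** 2 for s in sim)
    var_r = sum((r - mean_r) ** 2 for r in real)
    if var_s == 0 or var_r == 0:
        raise ValueError("success rates must vary across policies")
    return cov / math.sqrt(var_s * var_r)


if __name__ == "__main__":
    # Illustrative only: success rates for four hypothetical policies.
    simulated = [0.20, 0.45, 0.60, 0.85]
    real_world = [0.25, 0.40, 0.65, 0.80]
    print(f"r = {pearson_r(simulated, real_world):.3f}")
```

A high r means the world model ranks policies in the same order as reality and tracks their differences linearly. It does not by itself show that simulated success rates equal real ones, because a constant offset between the two leaves r unchanged.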

Comparison: DreamDojo vs. Cosmos Predict

| Feature | DreamDojo (Feb 2026) | Cosmos Predict 2.5 (Jan 2026) |
|---|---|---|
| Primary data | 44k+ hours of human video | Mixed physical AI data |
| Specialization | Dexterous manipulation | General world prediction |
| Action control | Fine-grained, action-conditioned | Mostly video-conditioned |
| Speed | 10.8 FPS (real-time) | Variable |

Applications

- Policy Evaluation: Companies can run thousands of “virtual trials” to see if a robot’s software is ready for the real world.

- Model-Based Planning: The robot’s “brain” uses DreamDojo to visualize the next 30 seconds of a task to choose the safest path.

- Humanoid Training: It is already being used to accelerate the development of the GR00T N1.6 humanoid project.
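The model-based planning idea above can be illustrated with random shooting, a deliberately simple planner that is swapped in here for illustration. The article does not describe the planner actually used with DreamDojo. The idea is to sample many candidate action sequences, imagine each one with the world model, and keep the one with the lowest cost, where entering an “unsafe” region is heavily penalized. `toy_world_model`, `imagined_cost`, `choose_plan`, and `random_shooting` are hypothetical names, and the 1-D dynamics is an assumption standing in for video prediction.

```python
import random
from typing import Callable, List, Sequence, Tuple

State = float
WorldModel = Callable[[State, float], State]


def toy_world_model(state: State, action: float) -> State:
    """Hypothetical stand-in for a learned model: a 1-D point moved by `action`."""
    return state + action


def imagined_cost(model: WorldModel, start: State, plan: Sequence[float],
                  goal: State, unsafe: Tuple[float, float]) -> float:
    """Roll the plan out inside the model and score it (lower is better)."""
    state, cost = start, 0.0
    lo, hi = unsafe
    for action in plan:
        state = model(state, action)
        if lo <= state <= hi:
            cost += 100.0  # heavy penalty for a predicted unsafe state
    return cost + abs(goal - state)  # plus remaining distance to the goal


def choose_plan(model: WorldModel, start: State,
                candidates: Sequence[List[float]], goal: State,
                unsafe: Tuple[float, float]) -> Tuple[List[float], float]:
    """Return the candidate with the lowest imagined cost, and that cost."""
    best = min(candidates,
               key=lambda p: imagined_cost(model, start, p, goal, unsafe))
    return best, imagined_cost(model, start, best, goal, unsafe)


def random_shooting(model: WorldModel, start: State, goal: State,
                    unsafe: Tuple[float, float], horizon: int = 5,
                    n_samples: int = 200, seed: int = 0
                    ) -> Tuple[List[float], float]:
    """Sample random action sequences and keep the best imagined one."""
    rng = random.Random(seed)
    candidates = [[rng.uniform(-1.0, 1.0) for _ in range(horizon)]
                  for _ in range(n_samples)]
    return choose_plan(model, start, candidates, goal, unsafe)


if __name__ == "__main__":
    plan, cost = random_shooting(toy_world_model, start=0.0, goal=3.0,
                                 unsafe=(1.4, 1.6))
    print([round(a, 2) for a in plan], round(cost, 3))
```

In a receding-horizon setup, the robot would execute only the first action of the chosen plan, observe the result, and replan. More sample-efficient planners such as the cross-entropy method follow the same imagine-then-score pattern.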