A recent study by Anthropic has sparked debate across the tech community. It suggests that while AI coding assistants such as Claude, GitHub Copilot, and ChatGPT can significantly boost speed, they may be unintentionally stalling the long-term growth of junior developers.

The research highlights a critical “cognitive trap”: developers who rely on AI to simply provide code without understanding the underlying logic eventually lose their ability to solve complex problems independently.

1. The “Copy-Paste” Paradox

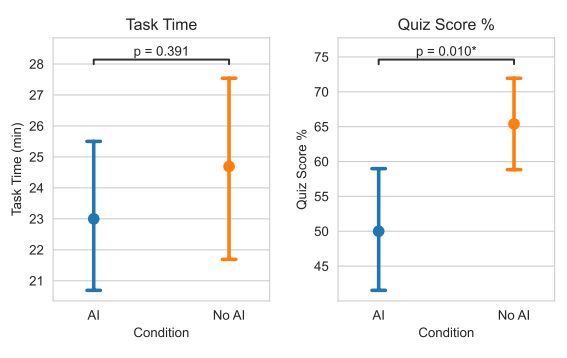

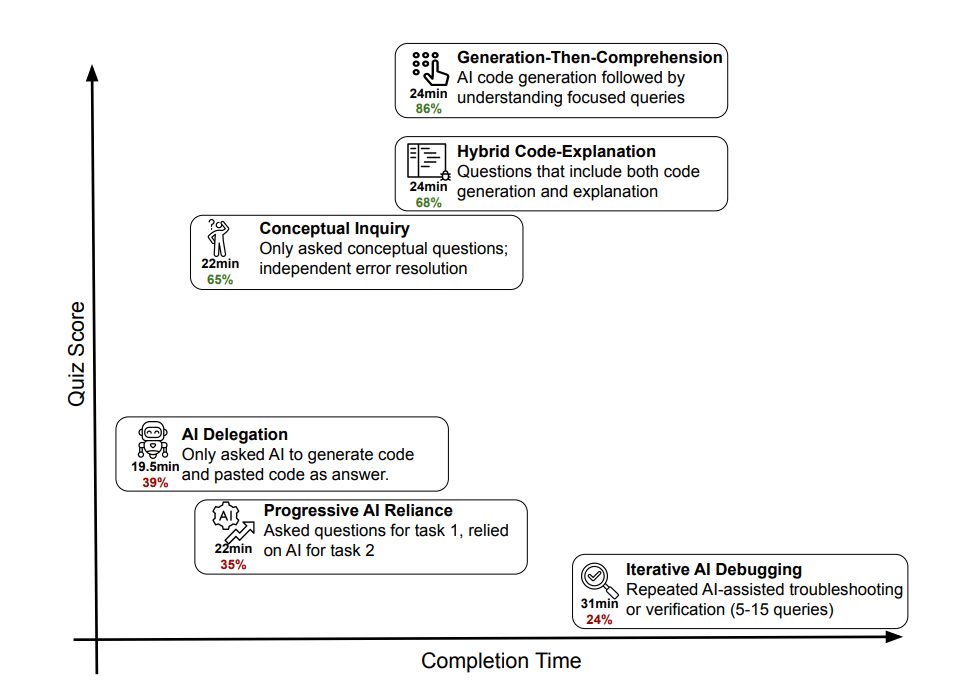

The study, which tracked hundreds of developers over a six-month period, identified a clear divide in how AI impacts professional growth.

- The Velocity Trap: Developers who used AI to generate “quick fixes” saw a 40% increase in short-term output but performed 25% worse on subsequent logic-based tests without AI assistance.

- Passive Learning vs. Active Inquiry: Anthropic researchers found that when the AI provides the “what” (the code), the human brain often skips the “how” (the logic). Over time, this results in “knowledge decay,” where the developer becomes a “prompt engineer” rather than a software architect.

2. The Solution: The “Ask Why” Protocol

The study does not recommend abandoning AI tools. Instead, it advocates a change in how developers use them. Anthropic found that developers who used a “Chain of Thought” approach, explicitly asking the AI to explain its reasoning, learned faster than those who used no AI at all.

How to Use AI for Active Learning:

| Instead of Asking… | Ask This… |
|---|---|
| “Write a Python script for X.” | “How does this logic solve X, and what are the trade-offs?” |
| “Fix this bug.” | “Explain why this bug occurred and how the fix prevents it.” |
| “Refactor this function.” | “What design patterns are you using here and why?” |

3. Junior Developers at Risk

The study's most alarming finding concerns entry-level engineers.

- The Seniority Gap: Senior developers use AI to automate “boilerplate” tasks they already understand. Junior developers, however, often use AI to solve problems they don’t yet understand, effectively bypassing the mental “struggle” required for neuroplasticity and skill retention.

- The “Black Box” Effect: If a junior developer cannot explain every line of code the AI wrote, they haven’t “written” the code; they have merely “curated” it.

4. Implications for Engineering Managers

In light of the study, major tech firms are reportedly adjusting their 2026 onboarding processes:

- Explainable AI Mandates: Some companies now require developers to include a “logic summary” in their pull requests (PRs) to prove they understand the AI-generated contributions.

- AI-Free Zones: Weekly “whiteboard sessions” or “blind coding” challenges are becoming common to ensure core problem-solving skills remain sharp.

- Prompt Literacy: Learning to prompt for explanation rather than execution is becoming a core competency in technical hiring.
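The “logic summary” mandate lends itself to a lightweight automated check. The Python sketch below is hypothetical: the heading name `## Logic summary`, the 30-word minimum, and the functions `extract_logic_summary` and `check_logic_summary` are illustrative assumptions, not a convention any particular company is known to use. It pulls the text under that heading from a PR description and flags the PR if the section is missing or too thin.

```python
# Hypothetical heading a team might require in every PR description.
REQUIRED_HEADING = "## Logic summary"
# Assumed minimum length; purely illustrative, tune to taste.
MIN_WORDS = 30


def extract_logic_summary(pr_body: str) -> str:
    """Return the text under the logic-summary heading, stopping at the next '## ' heading."""
    collecting = False
    collected: list[str] = []
    for line in pr_body.splitlines():
        stripped = line.strip()
        # Start collecting once the required heading is found (case-insensitive).
        if stripped.lower() == REQUIRED_HEADING.lower():
            collecting = True
            continue
        # A new second-level heading ends the section ('### ' subheadings do not).
        if collecting and stripped.startswith("## "):
            break
        if collecting:
            collected.append(line)
    return "\n".join(collected).strip()


def check_logic_summary(pr_body: str, min_words: int = MIN_WORDS) -> list[str]:
    """Return a list of problems; an empty list means the PR passes this check."""
    problems: list[str] = []
    summary = extract_logic_summary(pr_body)
    if not summary:
        problems.append(f"Missing or empty '{REQUIRED_HEADING}' section.")
    else:
        word_count = len(summary.split())
        if word_count < min_words:
            problems.append(
                f"Logic summary has {word_count} words; expected at least {min_words}."
            )
    return problems
```

A CI job could pass the PR description to `check_logic_summary` and fail the build when the returned list is non-empty. A word count is only a crude proxy, though; whether the summary reflects real understanding still needs a human reviewer.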

Conclusion: AI as a Tutor, Not a Crutch

The Anthropic study serves as a vital reminder that in the age of generative AI, the value of a developer is shifting from output to insight. As AI models become more capable, the surest way for humans to stay ahead is to treat the AI as a high-level tutor. By constantly asking “why,” developers can turn these tools into a superpower for learning rather than a shortcut to obsolescence.