A surprising new research finding suggests that AI reasoning models often spend more computational effort on easy problems than on difficult ones, challenging a common assumption about how artificial intelligence handles complexity. The study points to unexpected inefficiencies in modern reasoning-focused AI systems and raises questions about how these models allocate attention, reasoning steps, and compute.

The discovery has important implications for AI design, efficiency, and reliability, especially as reasoning models are increasingly used in science, coding, education, and decision-making.

What the Study Found

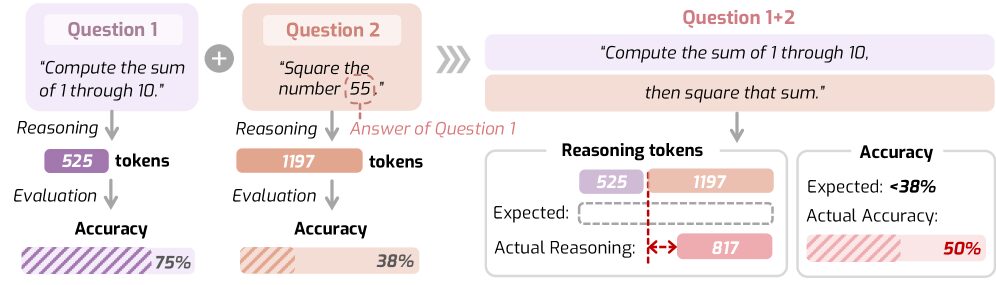

According to the study, AI reasoning models tend to “overthink” simpler questions, generating longer chains of reasoning and consuming more computation, while responding more quickly and shallowly to genuinely hard problems. Researchers observed that the models often failed to scale their reasoning depth appropriately with task difficulty.

In many cases, easy math or logic problems triggered extended internal reasoning steps, while complex or ambiguous questions received shorter, less detailed analysis.

Why AI Models Overthink Easy Tasks

Researchers believe this behavior stems from how reasoning models are trained. These systems are optimized to produce visible, step-by-step reasoning (often called chain-of-thought) because such outputs are rewarded during training. As a result, a model may default to verbose reasoning even when it is unnecessary.

On harder problems, uncertainty or the lack of clear patterns can cause the model to stop reasoning prematurely or jump to a probabilistic guess instead of fully exploring the problem space.

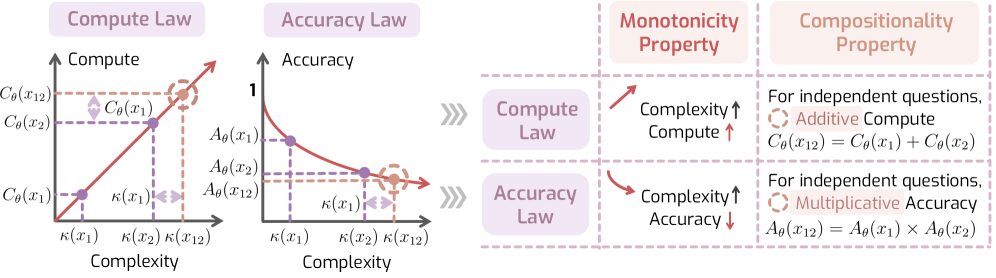

Misalignment Between Difficulty and Compute Use

The finding reveals a mismatch between problem difficulty and compute allocation. Ideally, AI systems should adjust how much effort they expend based on task complexity. Instead, current reasoning models appear to rely on surface cues, such as familiar formats or keywords, rather than on a genuine assessment of difficulty.

This inefficiency can lead to wasted compute on trivial tasks and underperformance on genuinely complex ones.
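One way to make "compute should scale with difficulty" concrete is to check whether reasoning length rises with difficulty across a set of problems. The sketch below is a minimal illustration, not the study's method: it assumes each problem already has a numeric difficulty score and a count of reasoning tokens the model produced, and the function names (`average_ranks`, `spearman`) are hypothetical helpers. It computes a Spearman rank correlation, where a positive value means effort grows with difficulty and a negative value matches the inverted pattern described above.

```python
from statistics import mean


def average_ranks(values: list[float]) -> list[float]:
    """Rank values from 1..n, giving tied values the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j across a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def spearman(difficulty: list[float], reasoning_tokens: list[float]) -> float:
    """Spearman correlation between difficulty and reasoning effort (hypothetical inputs)."""
    if len(difficulty) != len(reasoning_tokens) or len(difficulty) < 2:
        raise ValueError("need two equal-length lists with at least two items")
    rx, ry = average_ranks(difficulty), average_ranks(reasoning_tokens)
    mx, my = mean(rx), mean(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when every value is tied")
    return cov / (var_x * var_y) ** 0.5
```

A rank correlation is used rather than a plain Pearson correlation because token counts tend to be skewed, and the question is only whether harder problems get more reasoning, not whether the relationship is linear.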

Implications for AI Performance and Cost

As AI reasoning models become more widely deployed, inefficient compute usage has real-world consequences. Overthinking easy tasks increases latency and operating costs, especially in large-scale deployments such as tutoring systems, coding assistants, and enterprise decision tools.

At the same time, underthinking hard problems raises concerns about reliability, accuracy, and overconfidence in high-stakes applications.

Why This Matters for AI Safety and Trust

The fact that AI reasoning models think harder on easy problems than on hard ones also has safety implications. Users may take long, detailed explanations as a sign of correctness, yet the model can be confidently wrong on complex questions that received comparatively little reasoning.

This behavior reinforces the need for better uncertainty estimation, adaptive reasoning depth, and transparency in how AI systems generate answers.

What Researchers Suggest as a Solution

Experts suggest several ways to address the issue: training models to estimate task difficulty more accurately, adjusting reasoning depth dynamically, and evaluating reasoning quality separately from reasoning length.

Another proposed approach is teaching models when not to reason extensively: recognizing when a quick response is sufficient and when deeper analysis is truly required.
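The idea of matching reasoning depth to difficulty can be sketched as a simple budget policy. This is a hypothetical illustration, not a method from the study: it assumes some separate component already produces a difficulty estimate between 0 and 1, and the class name `BudgetPolicy` and its default thresholds are invented for the example. Below a "quick answer" threshold the policy allows no extended reasoning; above it, the token budget grows linearly up to a cap.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BudgetPolicy:
    """Hypothetical policy that sizes a reasoning budget from estimated difficulty."""

    quick_threshold: float = 0.2  # below this, answer directly without extended reasoning
    min_tokens: int = 256         # smallest budget once extended reasoning is enabled
    max_tokens: int = 8192        # cap for the hardest problems

    def budget(self, difficulty: float) -> int:
        """Return a reasoning-token budget for a difficulty estimate in [0, 1]."""
        if not 0.0 <= difficulty <= 1.0:
            raise ValueError("difficulty must be in [0, 1]")
        if difficulty < self.quick_threshold:
            return 0  # quick response: skip the long chain of thought
        # Rescale [quick_threshold, 1] onto [0, 1], then interpolate linearly.
        span = 1.0 - self.quick_threshold
        frac = (difficulty - self.quick_threshold) / span if span > 0 else 1.0
        return round(self.min_tokens + frac * (self.max_tokens - self.min_tokens))


policy = BudgetPolicy()
for d in (0.05, 0.2, 0.6, 1.0):
    print(d, policy.budget(d))
```

Linear interpolation is only the simplest possible mapping. The hard part the researchers point to is the difficulty estimate itself, which this sketch takes as given.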

Broader Impact on Future AI Design

The study highlights a fundamental challenge in building truly intelligent systems: reasoning is not just about thinking more, but about thinking appropriately. As AI moves closer to human-like problem solving, efficient allocation of cognitive effort will become just as important as raw capability.

Future reasoning models may need metacognition: the ability to judge how much thinking a task deserves.

What This Means for Users Today

For now, users should remain cautious when interpreting AI-generated reasoning. Lengthy explanations do not guarantee correctness, and short answers are not always a sign of simplicity.

Understanding the limitations of reasoning models can help users apply AI tools more effectively and responsibly.

Conclusion

The finding that AI reasoning models think harder on easy problems than on hard ones challenges intuitive ideas about artificial intelligence and exposes inefficiencies at the core of modern AI systems. It underscores that better reasoning comes not from more computation but from smarter computation.

As researchers work to close this gap, the study serves as a reminder that even advanced AI still has much to learn about how, and when, to think.